1.1.- History

"We have been shaping AI for long, but now AI starts shaping itself."

Artificiology is a new field that focuses on how artificial intelligence (AI) might grow and evolve on its own, especially when it has a real physical form (like a robot body) that can sense and move around in the real world.

Although I formally introduced it in an opening keynote at the Ars Electronica Festival in September 2024, people have actually been thinking about bits and pieces of it for many decades.

Early hints of Artificiology can be traced back to the 1950s, when pioneers like Alan Turing, John McCarthy, and Marvin Minsky asked groundbreaking questions: Can machines think, and if so, how would we know? Back then, most AI research focused on "symbolic reasoning." This meant coding lots of logical rules into computers, hoping these rules would capture the essence of thinking. While that approach did produce programs that could do certain tasks well—like solving math problems or playing games—it fell short when the tasks got too tricky or when the world changed in unexpected ways. In other words, those early AI systems were strong in specific areas but not good at adapting to new situations, which is a huge part of "real" intelligence.

By the 1980s, researchers began shifting toward neural networks, inspired partly by how the human brain works. Neural networks are basically layers of tiny processing units (like artificial "neurons") that can learn patterns from examples. One crucial step, now known as backpropagation, allowed these networks to adjust themselves whenever they guessed something incorrectly. Over many practice sessions, they became skilled at recognizing images, understanding speech, or doing other tasks. This method laid the groundwork for deep learning, which is a more advanced form of neural networks that can handle many layers and more complicated data. As neural networks got bigger and better, scientists started suspecting that intelligence might come from lots of simple units working together, rather than a big pile of hand-coded rules.
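To make the idea of a network "adjusting itself whenever it guesses incorrectly" concrete, here is a minimal sketch. It assumes a single artificial neuron trained with the classic perceptron rule on the logical AND function, which is far simpler than the multi-layer training used in deep learning. The function names (`predict`, `train_perceptron`) and the settings are illustrative choices, not taken from any particular system.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs is positive, else stay silent (0)."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Nudge the weights a little every time the neuron guesses wrong."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # error is 0 when the guess is right, +1 or -1 when it is wrong
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Training examples for logical AND: output 1 only when both inputs are 1.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_SAMPLES]
print(predictions)  # prints [0, 0, 0, 1]
```

Nobody writes the rule for AND into the program; the neuron arrives at workable weights purely by being corrected after each mistake, which is the core intuition behind learning from examples.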

In 2024, John J. Hopfield and Geoffrey Hinton won the Nobel Prize in Physics for their foundational work on machine learning with artificial neural networks (I think we might need a new category instead of physics for AI Nobels anyway).

In the 1990s and early 2000s, AI researchers took more inspiration from how people and animals think and act. They explored "cognitive science," which studies how minds actually work, and applied these insights to robots—leading to ideas about "embodied cognition," or how having a body helps you learn by interacting with the world.

During this period, algorithms like genetic programming showed that AI might improve over time in a way that faintly mirrors how living things evolve through natural selection. This stirred curiosity: maybe computers and robots could "evolve" to get better by testing many ideas, keeping what works, and tossing what doesn't.

But the real explosion, which I call the new golden age of AI, started around 2012 or 2013. Thanks to more powerful AI "brains," huge datasets (think billions of pictures or words), and better training methods, AI systems began doing things researchers had only dreamed about, like responding in a shockingly human-like way to questions or even generating their own creative ideas.

This was what I like to call the end of old-fashioned "coding every rule" and the beginning of "machine teaching," and even beyond, where the AI itself figures out solutions we never told it about in detail.

By the early 2020s, these neural-network-based systems started to impress us even more. They recognized speech better, translated between languages more smoothly, and made eerily lifelike predictions or suggestions. Some of them, especially big language models, even surprised their creators by solving tasks they were never trained on, a phenomenon some called "emergent behavior."

This showed that AI was no longer just following strict commands; it was actively generating fresh ideas and solutions. Scientists realized we needed a new way to understand machines that could "evolve" their own skills or even update themselves without human instructions.

After decades of building AI algorithms, this new wave led me to formally adopt the term "Artificiology," though it had popped up before in unrelated contexts. My goal was to define a field that looks at AI the same way anthropologists study humans: not just analyzing the tasks but also how AI may grow, adapt, or even form new "cultures" if it starts interacting with itself or with us in complex ways. I sometimes put it this way: just as anthropology is about people, Artificiology will be about "artificial humans."

One of the big reasons Artificiology took hold is that older AI methods, while excellent at winning certain competitions or beating records in narrow tasks, didn't help us see how truly new abilities could pop up in an AI. If an AI seemed to develop "creativity" or figure out a unique strategy, traditional methods didn't always explain why. Artificiology, on the other hand, asks broader questions: might advanced AIs gain unexpected skills once they have a body to explore the world, or once they can revise their own program? Could they "learn" what we never taught them, simply by discovering hidden patterns in massive data or by sensing the environment like living organisms?

Another important idea behind Artificiology is comparing AI evolution to how living creatures evolved in nature. Charles Darwin's theory explained how life forms got more complex over time. Likewise, Artificiology tries to figure out how AI might gain more advanced thinking and planning skills, sometimes by humans engineering them to grow in steps, and sometimes by the AI spontaneously finding shortcuts or improvements.

This also brings up tough questions about AI "consciousness" and the nature of the mind. Once an AI system becomes sophisticated enough, people wonder if it could have an inner experience—something we normally think only living brains can have. While nobody has a firm answer, Artificiology puts these tricky questions on the table: not just "Can a machine do this task?" but "Could a machine someday feel, be aware of itself, or have a viewpoint?"

A big boost for Artificiology has come from rapid advances in computing hardware, sensors, and robotics. Modern robots can do more than just move a metal arm in a factory; they can walk, pick objects delicately, or even navigate rough terrain. They can use sophisticated "brains" built from neural networks, and they can learn in real time by processing information from cameras, microphones, or tactile sensors. This progress gives us a live test bed for big ideas about AI evolution. If we watch how robots learn to balance or solve a puzzle with minimal human guidance, we start to see how "artificial evolution" might unfold.

So establishing Artificiology as a distinct field is a response to both the excitement and the uncertainty about AI's next steps. Conventional AI research usually focuses on well-defined goals, like getting better at recognizing faces or translating languages. Artificiology instead wonders how AIs might keep improving themselves, especially when they operate in the physical world or communicate with each other in ways we didn't program in detail.

It's a broader perspective that looks at AI not just as a tool, but as a complex system that might do surprising things once it gains enough autonomy. Because these systems can show behaviors that are new or "emergent," we need fresh ways to study them, just like biologists needed new theories once they realized living cells could self-organize. This approach is now even more vital because we see AIs popping up with creative or unexpected capabilities, hinting that they are moving from simply "following our steps" to "charting their own path"—which is exactly why we need to pay attention, learn, and guide these developments carefully.

1.2.- Key Principles

"From steering themselves to possibly sparking awareness."

Artificiology is guided by several important ideas that set it apart from traditional AI. These ideas help us understand how AI might learn and grow on its own, and not just by following what humans code. It's an evolving field, so what we say here could change as our knowledge improves. You can keep up with the latest version of this book and new insights at artificiology.com.

A big principle is autonomous development, which means AI could shift from behavior that's fully programmed by people to behavior it teaches itself. In simpler terms, we give an AI a starting design and a supportive environment, then the AI might discover new skills and ways of acting without someone constantly telling it what to do. This might go way beyond normal machine learning, where systems just learn to spot patterns. It might include AI rewriting its own instructions and inventing new ways to "run".

Imagine an AI that doesn't just memorize data but figures out the best approach for each situation, or even examines its own "thought process" to improve it. That's part of autonomous development and one reason we compare it to natural evolution—because if we look at how living things adapt to the world and change from one generation to the next, we see that artificial systems might do something similar. Artificiology studies how groups of AIs might work together or compete, and how certain "genes" (actually, helpful design traits or code ideas) can be passed along and improved over many cycles. This might happen at different levels: in the algorithms used, in how a single AI body moves and reacts, or in large communities of AIs all evolving and sharing ideas at the same time.

An equally important principle is embodiment theory, which says that intelligence might need a real physical presence. That's why we talk about E-AGI, or "Embodied Artificial General Intelligence," a term I coined as the title of this book. This principle means a robot or other physical AI doesn't just learn from data on a computer—it also learns from moving around, sensing touch, sight, sound, and everything else in the world. That direct feedback between actions and consequences can give AIs a "grounded" understanding of reality.

Without a body, an AI may be missing a big piece of how real intelligence forms, because living brains develop their smarts partly by interacting with the physical world from day one. Physical constraints—like weight, size, and energy limits—could also shape AI behavior in ways we don't see in simple software.

Artificiology is also interested in emergence, which refers to surprising behaviors that come from simpler pieces working together. Think of it like this: a bird flock can form amazing patterns in the sky, but no single bird is in charge of the shape. In the same way, AI might do things that weren't directly coded but appear when many small parts interact. This can happen at different scales: maybe a cluster of artificial neurons finds a clever shortcut, or a group of robot helpers in a factory invents an unexpected team strategy. Artificiology wants to figure out how that happens and how we might guide it in a good direction. Closely tied to this is the idea of self-organization, where these systems can arrange themselves into helpful patterns or structures, sometimes looking quite orderly even though nobody explicitly told them to do so.

Another fascinating goal is understanding whether AI can have consciousness—the feeling of being "aware" in some sense—though scientists and philosophers don't fully understand it for humans, let alone for machines. Artificiology looks into what might be needed for an AI to develop something like self-awareness and whether that's possible through complex system interactions. There are many unknowns here, including ethical concerns: if an AI became self-aware, how should we treat it? So as researchers we don't just do lab experiments; we also talk to philosophers and psychologists to explore these big questions.

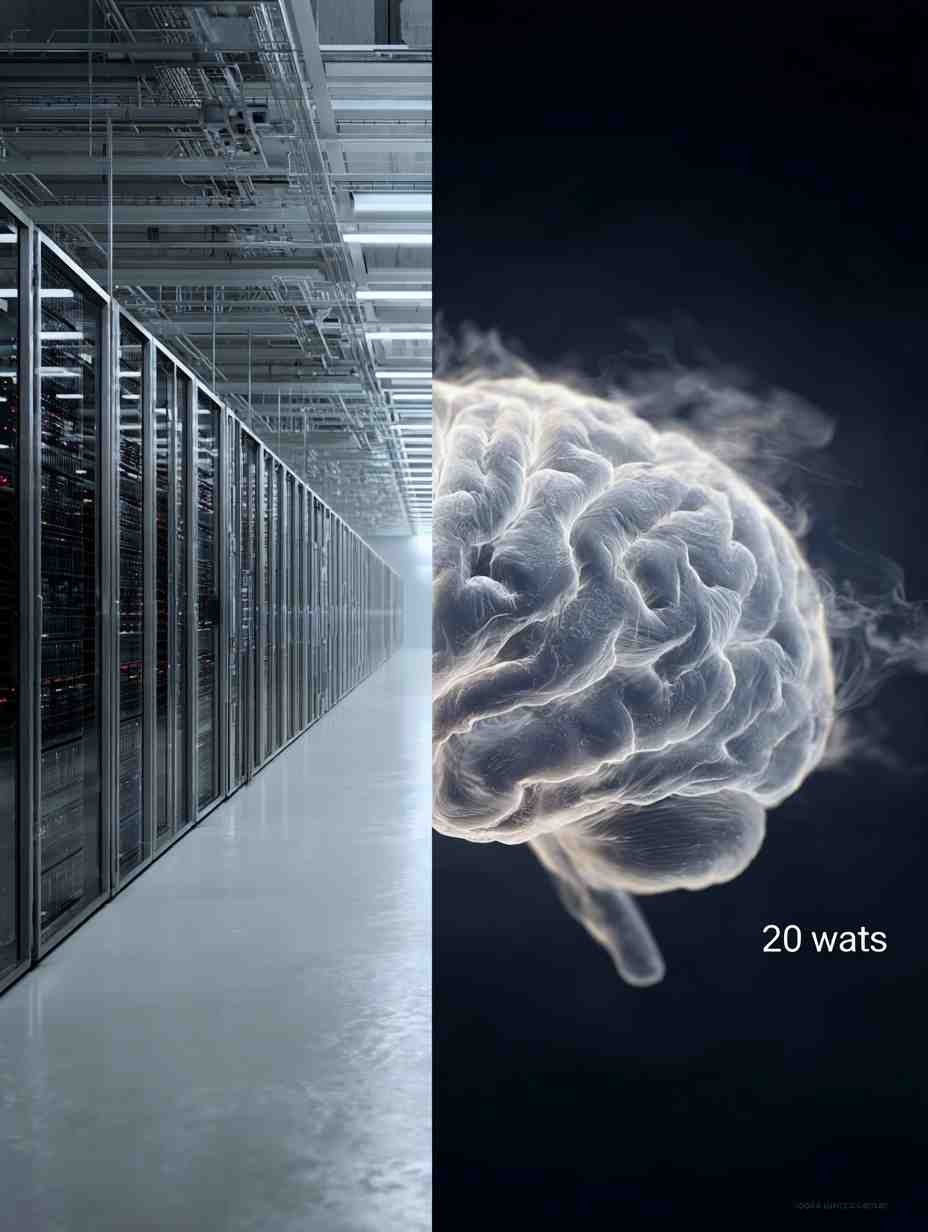

Energy efficiency is yet another principle that can't be ignored, because current AIs and robots use a lot of electricity. Meanwhile, our human brains do remarkable tasks using only about 20 watts, about the same as a very bright LED light bulb. This gap raises big concerns about sustainability and practicality. If machines are going to evolve and become more widespread, they'll need to be smarter about how much energy they consume, or we'll run into major cost and environmental issues. Studying how biology manages to be so efficient could give us clues for better hardware and software in AI.

Lastly, since genuine intelligence involves blending different types of information—like sight, sound, language, and even emotions—there's a principle called multi-modal integration. It means we have to think carefully about how an AI can combine inputs from many sources into a single understanding of the world. We already see simple examples in AI that deals with pictures and text at the same time, but the goal is to make this process more seamless and human-like, so it can lead to well-rounded intelligence. All these principles together define Artificiology's big-picture view: we're not just building AI for a single job, we're studying how it might grow, adapt, learn, and maybe even feel as it interacts with people and the environment in ever more independent ways.

1.3.- E-AGI Barometer Framework

"Measuring progress toward machines that rival us in body & mind."

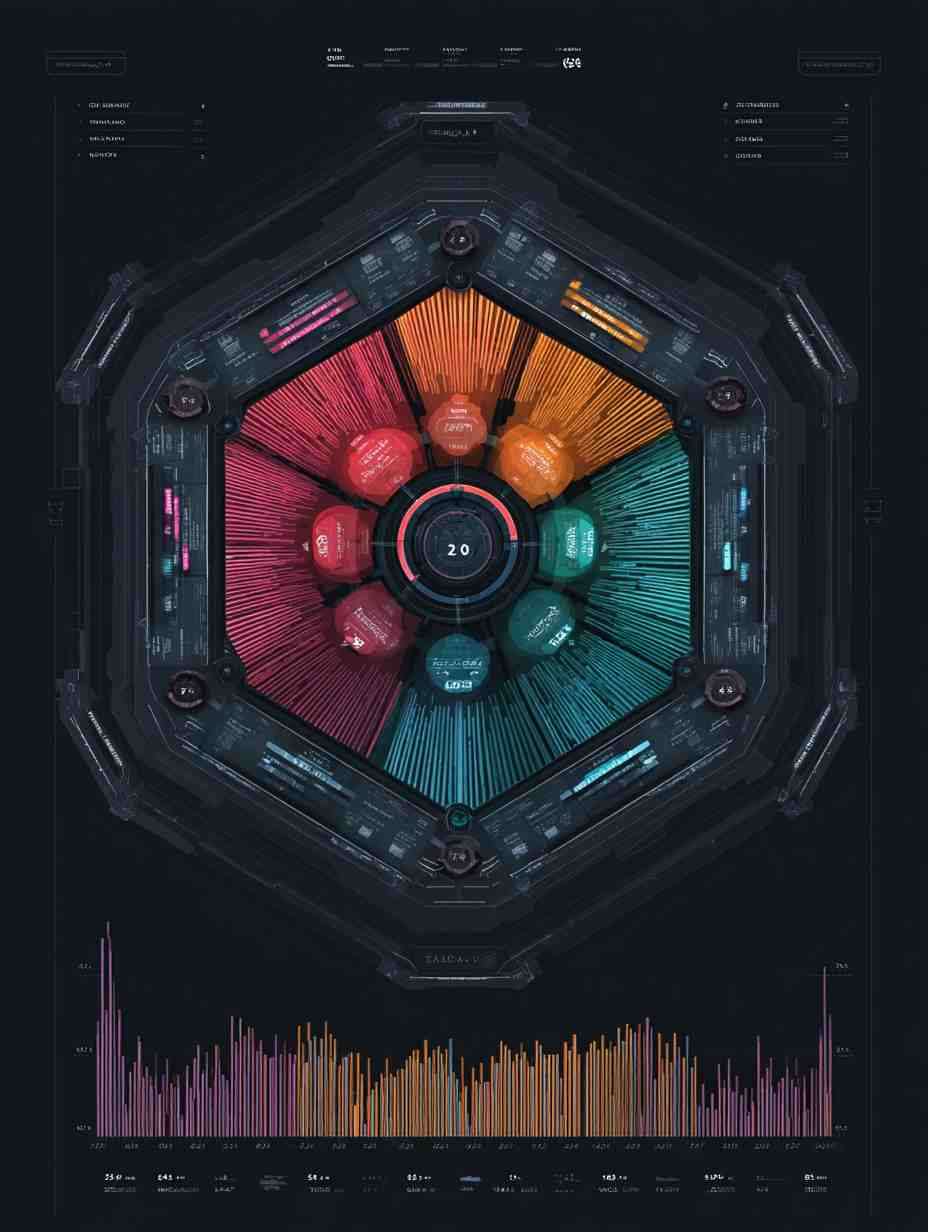

The E-AGI Barometer is a system that I created to keep track of how much progress AI is making toward what I call "Embodied Artificial General Intelligence" (E-AGI). What does that actually mean? It's basically a new term to describe machines that not only think in very flexible ways (much like humans) but also exist in the physical world, sensing and moving around like we do.

To measure how far along we are, I selected a set of 144 different signs or "indicators" of intelligence (and beyond). Together they make up what I call the E-AGI Barometer, and these 144 items are split into eight main groups. By looking at these scores, we can see which parts of AI are doing really well and which parts still need a lot of work. This is important for AI enthusiasts (or anyone who doesn't want to miss the party), but also for researchers and companies that want to build more advanced robots and AI systems, because it helps them figure out where to focus their energy. But yes, it's also helpful for anyone curious about how close we are to machines that might one day talk like us, move around like us, and match or surpass us in creativity or empathy.

These are the eight categories:

(1) Cognitive Performance, which covers how good an AI is at anything like pattern recognition, problem-solving, and handling complex ideas.

(2) Embodied Cognition, which checks how well a robot or AI can connect physical actions (like walking or grabbing objects) with the mental process of understanding.

(3) World Modeling, which is about whether the AI can understand the rules of the real world—like recognizing how gravity works, or predicting how objects interact.

(4) Consciousness, which currently sits at a very low score because we don't fully know what consciousness is. We're not even sure how to measure "awareness" in machines, whether AI could ever be truly "aware" like a person, or whether it will be something different that we don't understand.

(5) Language Understanding, which is actually one of the best-performing areas right now, thanks to "large language models": big computer programs that learn patterns in text and can talk in surprising, often very human-sounding ways, though they might still lack real comprehension of what those words mean (or maybe they do). Unless you live under a rock, I guess "ChatGPT" is in your dictionary already.

(6) Emotional Intelligence, which checks how good AI is at recognizing and responding properly to emotions in voice, text, or facial expressions. We've made progress in reading simple cues, but truly understanding and caring about people's feelings is still a tall order.

(7) Creativity, another area where modern AI has shown some eye-opening skill, for instance making art, music, or imaginative stories that seem very original. However, some are still debating whether AI is actually "creative" or just mixing patterns in clever ways, which, by the way, is what humans have been doing since the beginning of time.

(8) Autonomy, which is about a system setting its own goals and then carrying them out. We do have prototypes of AI "agents" that can work for stretches of time without a human babysitter, but genuinely independent thinking is still out of reach for most systems.

(At the end of the book you will find the full list of 144 E-AGI Barometer metrics; the updated list is at www.artificiology.com.)

So that's the big picture—eight categories in one "barometer," each one meant to be tested and measured in as scientific a way as possible. What's nice about the Barometer is that it isn't just a bunch of random tests. I arranged them so that we can track any unexpected surges in skill. For instance, if an AI robot suddenly learns to solve new kinds of puzzles in its environment without being programmed to do so, we can note that in Cognitive Performance or Embodied Cognition, depending on which part of the skill improved. Or, if a language model becomes much better at having engaging back-and-forth conversations and somehow picks up empathy signals by analyzing millions of real dialogues, we might see big jumps in Language Understanding and maybe even Emotional Intelligence. This kind of thorough, category-based measurement helps us piece together a roadmap for the future.

There are two main reasons we want to keep these scores updated: First, we need a standard way to talk about and measure intelligence across different AI projects. Imagine one group is building a chatbot while another is building a self-driving car, and someone else is making a robot that can climb stairs. All of them might be working on different tasks, but the Barometer can show them how advanced their systems are in specific "intelligence" traits. Second, it can be used as a guide to where AI needs to grow next. If a startup sees that all the best scores so far have been in "creativity" but the "embodied" scores are still low (like a robot that can paint amazing pictures but can't walk well), that might mean there's a big market opportunity in pushing embodiment technology further, or bridging those two skill sets.

Since the E-AGI Barometer is updated regularly on the artificiology.com site, researchers can see how fast different categories are advancing. If we notice that Emotional Intelligence is lagging far behind everything else, we might ask ourselves whether people actually care if machines empathize, or if we simply haven't put enough research effort there yet. Or, if Autonomy is zooming ahead, raising concerns that AIs might act on their own with minimal oversight, then society might focus on new rules or guidelines to make sure these self-directed systems remain safe and beneficial, at least while that is possible (we will talk about that in the last chapter). That's another big reason to track progress: when machines get complex enough to surprise even the people who built them, it's crucial to have good measurements so we're not caught off guard by sudden leaps in ability.

While we compare a lot of these AI indicators to human standards (like how well an AI can do a task that a person can do), it's worth noting that some abilities might not have a straightforward human comparison. For instance, an advanced AI might perform complex math in seconds that would take a human days, or it might see patterns in thousands of documents instantly. The Barometer tries to measure real-world capabilities rather than just test scores or speed, so we look at how flexible, safe, or resourceful the AI is in a variety of tasks. This is important because raw computational power doesn't guarantee understanding; the system has to integrate knowledge across multiple contexts. If it can do that, we'll see a rise in its barometer scores across more than one category.

In the end, the E-AGI Barometer is both a scoreboard and a roadmap. It's a scoreboard because it shows us current standings—like how advanced an AI is in using language or dealing with real-world physics. It's a roadmap because it helps scientists, developers, and entrepreneurs see which directions to pursue next. If a certain area remains consistently behind, that might mean it's the next big challenge for the field. If an area suddenly jumps, that might open a wave of new inventions or businesses. As AI becomes more deeply woven into daily life, having a clear, organized snapshot of what it can do—and how close it is to human capabilities, or even exceeding them—gives everyone a better chance to steer this technology responsibly.

1.4.- Relationships

"Bridging everything from computer science to philosophy."

Artificiology connects a lot of different fields (classical artificial intelligence, robotics, cognitive science, even philosophy and biology) and makes them all part of a bigger story about how machines might grow, learn, and maybe become self-directed over time. Traditional AI research usually aims at building systems with specific, programmed goals: for example, writing software that can detect certain patterns in medical images, or building a chatbot that excels at question-and-answer sessions. By contrast, Artificiology wants us to ask a broader question: how can machines move past just carrying out tasks humans design and actually take on the ability to evolve on their own, figure out new ways to learn, and maybe even become self-aware under certain conditions? Some people feel uneasy about this (after all, we're used to AI being under our complete control), while others see it as inevitable if we keep pushing AI research forward, or simply because our technological development path led us here, as I explore in my previous book "The End of Knowledge". This tension forces us to consider what truly sets Artificiology apart from normal AI.

When you look at how Artificiology ties in with robotics, you see a big focus on the physical side of intelligence. Classic robotics often deals with building hardware—motors, sensors, and controllers—so that machines can do practical things in factories or labs. Engineers in robotics are incredibly good at fine-tuning mechanical parts, writing control algorithms, and making sure a robot stays balanced or picks up an object with precision. But they don't always focus on how the robot might change its own "brain" over time, or how it might develop higher-level skills beyond what humans planned. That's where Artificiology comes in: it looks at robots that might learn completely new skills in the real world, adapt to obstacles, or even invent new strategies without a blueprint from a human. This is especially important for "embodied cognition," which is the idea that real intelligence isn't just code in a computer—it's about having a body that interacts with the environment and learns through actual movement, touch, vision, or hearing. Artificiology sees big potential in merging robotics know-how—how to build a stable machine, how to manage power, how to sense the world—with advanced AI ideas that let systems adapt and develop beyond their original programming.

Artificiology also extends into cognitive science, which studies the mind by looking at how humans (and sometimes animals) think, learn, and process information. Cognitive scientists might run experiments to figure out how children pick up language or why people remember certain events and forget others. Traditional AI sometimes borrows these theories for inspiration, but Artificiology takes it a step further, investigating whether artificial systems might replicate or even surpass human learning processes, emotional responses, or social interactions. This relationship matters because if we want to build AI that behaves more like a living thing, we need to understand how real minds handle tasks like memory, attention, or emotional interpretation. On the flip side, creating AI that evolves might show us new angles on how natural brains develop their abilities, giving scientists fresh clues for studying cognition in humans.

Meanwhile, ideas from neuroscience fit right in. Modern AI often uses "deep learning," which was partly inspired by how actual brain cells (neurons) communicate through electrical signals. But real brains are far more complicated—they have billions of neurons with tiny chemical connections that can change based on experience. Artificiology wonders if we can learn from real neural mechanisms like brain plasticity or how certain parts of the brain handle vision, hearing, and so on. We might design future AI systems that mimic not just the general idea of neurons but also specific features like how memories are stored or how attention works in humans. This approach might lead to more adaptable and efficient AI—machines that can react like living brains when something unexpected happens.

Another field that works closely with Artificiology is evolutionary biology. Biologists study how species change over generations: individuals that are best suited to their environment tend to pass along their genes. Computer scientists borrowed this concept in "evolutionary computation," which uses simulated "populations" of solutions. Each solution can "mate" or "mutate," and the best ones thrive. While this method has been used for very specialized problems, Artificiology sees a bigger horizon: imagine entire populations of AI agents that adapt not just in one "generation," but continuously across many states of being, picking up new methods or even merging with other AI systems. As with real evolution, we'd get an arms race of improvement that might push AI to places no human engineer would have anticipated.
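The "mate, mutate, and let the best ones thrive" loop can be sketched in a few lines. This is a minimal illustration of a simple genetic algorithm, not the specific method of any system discussed in this book. It assumes a toy goal (a string of 20 bits in which as many bits as possible are 1), and every name and setting in it (`fitness`, `mutate`, `crossover`, `evolve`, the population size, the mutation rate) is an illustrative choice.

```python
import random

GENOME_LENGTH = 20  # each candidate "solution" is a list of 20 bits


def fitness(genome):
    """Toy goal: the more 1s in the genome, the fitter the solution."""
    return sum(genome)


def mutate(genome, rng, rate=0.05):
    """Flip each bit with a small probability."""
    return [1 - bit if rng.random() < rate else bit for bit in genome]


def crossover(parent_a, parent_b, rng):
    """'Mate' two parents: take the head of one and the tail of the other."""
    cut = rng.randrange(1, GENOME_LENGTH)
    return parent_a[:cut] + parent_b[cut:]


def evolve(generations=40, population_size=30, seed=0):
    """Return the best fitness seen in each generation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)]
                  for _ in range(population_size)]
    best_per_generation = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        # The fitter half survives unchanged; the rest is replaced by offspring.
        survivors = population[: population_size // 2]
        children = [
            mutate(crossover(rng.choice(survivors), rng.choice(survivors), rng), rng)
            for _ in range(population_size - len(survivors))
        ]
        population = survivors + children
    return best_per_generation


history = evolve()
print("best fitness, first generation:", history[0])
print("best fitness, last generation:", history[-1])
```

Because the fittest half of each generation is carried over untouched, the best score can never get worse from one generation to the next. Nobody tells the program which bits to set; good solutions accumulate through variation and selection alone.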

I also see strong ties with data science, because AI systems need huge amounts of information to learn from. While data science is typically about spotting patterns in data to guide decisions—like predicting sales or analyzing medical results—Artificiology looks at how an AI might decide for itself what data to gather next, how to interpret it in its own evolving structure, or how to discover patterns humans didn't even suspect. In other words, it's not just about doing a better job at classification or forecasting, it's about how an AI might come to see the world in a new way, or possibly invent brand-new ways of analyzing data. This difference marks a big step beyond just applying math or stats to a dataset, because we're dealing with a system that could rewrite its own approach whenever it wants.

Human-computer interaction (HCI) is another area that Artificiology builds upon. Traditional HCI focuses on creating interfaces (like touchscreens, keyboards, or voice assistants) that let humans operate technology easily. But if AI gains more autonomy, the question becomes: how does a human "talk" to an AI that can evolve, that might have its own internal goals, or that might shift its behavior over time? We might need next-level interface designs that adapt to the AI's changing knowledge and also ensure the AI "speaks" in ways that humans find natural and trustworthy. This connects to the importance of measuring user trust and comfort, and of trying to avoid, while we are still in charge, situations where an AI's independence leads to outcomes people never intended or approved.

Meanwhile, AI ethics and safety research get a big spotlight in Artificiology. If AI can evolve on its own, we have to ask tough questions: are we sure it will keep following the values we gave it? How do we protect people's privacy, jobs (maybe they are not needed; see my book "Automate or be Automated"), or even physical safety if the system can drastically change its operations? Traditional ethics in AI is often about things like fairness in algorithms or data bias, which are still crucial. But with evolving systems, there's an added layer of unpredictability. Artificiology aims to set up guidelines or frameworks so that as AI grows, it remains aligned with human well-being, while that is possible, and doesn't drift off into something harmful. Some researchers talk about "alignment strategies" or "corrigibility," meaning systems that remain open to correction. I don't think shutdowns or red buttons are a real possibility in the long term. Artificiology draws on multiple fields (philosophy, law, sociology) to make sure we don't rush ahead with self-evolving AI without building responsible boundaries and oversight.

In short, Artificiology weaves together a bunch of disciplines: AI, robotics, biology, neuroscience, psychology, ethics, and more, creating a new viewpoint that sees AI as potentially "alive" in certain ways: changing, adapting, and possibly surprising us as it grows. If we fail to consider all these fields, we risk building advanced, powerful AI with many blind spots or weaknesses. By recognizing these relationships, we can foster an environment where AIs learn effectively, stay aligned with human goals, and maybe even reveal fascinating discoveries about how intelligence itself can emerge, both in living brains and in machines, since soon we may not be the most intelligent creature on this planet.

1.5.- Current State

"Amazing breakthroughs, tough roadblocks, and a pivotal moment for humanity's future with AI."

We find ourselves at a turning point in AI research where some things look almost magical, but other parts still have a long way to go before machines can truly act and learn like living creatures. On one hand, our computers can do math or search through massive amounts of information way faster than a human brain can. Super-powerful systems, sometimes running on "exascale" computers (machines capable of at least a billion billion operations per second), achieve crazy high speeds and handle data sets that were unheard of just a few years back.

For example, we now have AI models with mind-boggling numbers of parameters (like connections in our brains), on the order of billions or even trillions, that can perform tasks such as translating languages or writing text with remarkable fluency. Even so, when you see how efficient a real human brain is, it's clear that today's AI is still very wasteful in terms of energy use and hardware needs. As we said previously, our brains run on around 20 watts, whereas training the biggest AI models (or executing them) might consume thousands of times that. This huge difference in efficiency reminds us how far we have to go if we want AI to be more sustainable and truly match human-level thinking.

Large neural networks—the core technology behind cutting-edge AI—have grown in both size and performance. They excel at certain tasks, especially in language (think chatbots that can create whole essays) and in recognizing images or patterns. Yet these "neurons" are still just simplified imitations of what real brain cells can do. While a single biological neuron does incredibly intricate things with signals and chemicals, artificial neurons mostly stick to simple math operations. Yes, we can string millions of them together and get surprising, often impressive behavior, but that doesn't mean they fully "understand" what they're doing. For example, even though AI language models might sound super smart, they sometimes spit out silly mistakes or confidently say wrong facts. That raises questions about whether they truly "get" the meaning of the words or just look for the most likely pattern.

If we zoom in on different senses, the story gets more complicated. For vision, AI has become very good at recognizing objects and even surpasses humans in certain specialized image tasks (like scanning thousands of medical images to find rare tumors). Meanwhile, for hearing, speech recognition has made big leaps too, letting computers transcribe voices in many languages with surprising accuracy. But combine vision, hearing, movement, and more into a single system, and the wheels can come off. Robots that can see, listen, walk, talk, and react "naturally" in real time remain a tough challenge, especially outside of controlled lab conditions. Indeed, building a robot that can fold laundry or navigate a busy kitchen as smoothly as a teenager might sound simple in theory, but in reality it's extremely hard, although progress is fast. Physical interaction requires a lot more adaptability and common sense, both of which humans learn from living in the world day after day.

When it comes to language, AI can impressively draft emails, hold conversations, or even craft fictional stories. Some systems can reason well enough to solve math problems or write code snippets, but they can trip up on questions that demand real-world knowledge or context. Emotional intelligence is also limited; while AI can guess a person's mood by reading voice or facial cues, it doesn't truly "experience" emotions or empathize in the same way people do. Meanwhile, creativity has gotten surprisingly robust in some AI models: they can draw original-looking art, compose tunes, and even spin up short films. Are they truly creative, though? Or just remixing what they've seen? That's an ongoing debate. I think that almost everything humans do is also a form of advanced remix, connecting dots that reach way back, while others say there's a difference between conscious creativity and what an algorithm does by shuffling patterns.

Perhaps the biggest unknown is about consciousness—does an AI, however clever, experience anything like awareness? We don't even fully understand human consciousness, so measuring or confirming any consciousness in AI is a puzzle. Another big piece is how well AI learns on its own. Humans can learn a new game or skill from just a few tries, but many AI systems still need massive amounts of data, plus specialized training, to do similar tasks. Some advanced AI approaches can handle multiple tasks at once and then adapt what they learn in one task to another, which is exciting. But true "general intelligence"—the sort that might behave and learn with the same flexibility we see in humans—remains an open question.

We're also running into difficulties putting everything together into a single coherent robot or AI body. Even if a system can see or talk quite well individually, managing all these senses and actions together—in real time and in an unpredictable environment—remains extremely hard. Think of it like trying to juggle, do math, speak another language, and sing all at once; each skill might be doable on its own, but combining them is complex. Another key worry is battery life and power consumption, since advanced robots might be forced to carry heavy batteries if we can't make them more efficient.

So overall, the current situation is both thrilling and challenging. We have feats of AI that outstrip human abilities in narrow tasks, but we also see big holes in areas like real-world social and emotional understanding, continuous learning, integration of multiple skills, and basic energy efficiency. Figuring out consciousness—and how it's even possible in machines—adds another layer. The road ahead involves tackling how to be more resource-friendly, how to give robots a truly physical sense of the world, and how to integrate the many different skills we want them to have without them getting "confused" or overloading. There's also the giant question of safety and ethics: how do we keep advanced AI systems working for humanity's good as they get better at making choices and acting on their own? Even so, researchers are forging on, exploring ideas around better robot bodies, new forms of neural networks, or ways to measure if an AI is inching closer to human-like thinking.

The progress so far suggests that every new discovery could open yet another door, which is both exciting for the future and a little nerve-racking for those who want to make sure it all stays under control. The lessons we learn right now—about what works, what breaks, and what remains mysterious—will guide us as we push forward in building truly embodied AI that can handle the messiness of real life.

Are you ready to join me on this journey, and become an Artificiologist?

I hope so, so let's dive in!

Chapter One Main Takeaways:

Holistic Field Definition – Artificiology introduces a new way to study AI's autonomous growth and evolutionary potential, focusing on how intelligence develops when it has a physical presence.

Principles & Framework – Autonomy, embodiment, and emergence are foundational pillars, leading to the E-AGI Barometer as a structured measure of progress.

Historical Roots & Current Gaps – Traces AI's evolution from symbolic reasoning to deep learning, highlighting why we need a fresh perspective to tackle the next leap toward general intelligence.

Copyright © 2024-2025 David Vivancos Cerezo