Metric Rationale:

Multimodal emotion integration is the capability of an AI or humanoid robot to synthesize emotional cues from multiple sensory channels (such as facial expressions, vocal tonality, body posture, or textual content) into a unified understanding of a user’s emotional state. While a single channel (like facial expressions) may offer clues to happiness or anger, combining it with additional signals (like a trembling voice or hunched shoulders) can substantially improve the accuracy of emotional interpretation. Humans naturally merge these modalities, noticing subtle inconsistencies, like a smiling face paired with a worried voice, and interpreting them as complex or ambivalent emotions.

For an AI, multimodal emotion integration often involves parallel data streams. One stream could be a camera feed capturing the user’s expressions and posture, another an audio input for vocal tone, and potentially a textual feed for chat messages or transcripts. Each channel has its own noise characteristics: facial recognition might be hampered by low light or partial occlusion, while audio can degrade in a noisy environment. Text might contain emotional keywords but miss paralinguistic nuances. By cross-referencing these cues, the AI can resolve ambiguities—e.g., recognizing genuine happiness if a user’s face and vocal features align, or detecting underlying discomfort if the user’s tone is inconsistent with a neutral facial expression.
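The cross-referencing step described above can be sketched as a comparison between two per-channel emotion distributions. This is a minimal illustration, not a reference implementation: the function name `cross_reference`, the probability dictionaries, and the `threshold` of 0.3 are all assumptions introduced here, and the per-channel classifiers that would produce the probabilities are out of scope.

```python
from typing import Dict

def top_label(probs: Dict[str, float]) -> str:
    """Return the emotion label with the highest probability."""
    return max(probs, key=probs.get)

def cross_reference(face: Dict[str, float],
                    voice: Dict[str, float],
                    threshold: float = 0.3) -> dict:
    """Compare two channel-level emotion distributions.

    Uses total variation distance (0 = identical, 1 = disjoint) as a
    simple disagreement score. The threshold is a hypothetical value
    that would need tuning on real data.
    """
    labels = set(face) | set(voice)
    distance = 0.5 * sum(
        abs(face.get(label, 0.0) - voice.get(label, 0.0)) for label in labels
    )
    face_top, voice_top = top_label(face), top_label(voice)
    return {
        "face_top": face_top,
        "voice_top": voice_top,
        "distance": distance,
        # Congruent only if both channels agree on the top label
        # and their full distributions are close.
        "congruent": face_top == voice_top and distance < threshold,
    }

if __name__ == "__main__":
    # Neutral face but a sad-sounding voice: flagged as incongruent.
    print(cross_reference({"neutral": 0.9, "sadness": 0.1},
                          {"sadness": 0.7, "neutral": 0.3}))
```

An incongruent result does not say which channel is right; it only signals that the system should treat the user's state as ambiguous or mixed rather than trust either channel alone.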

Another layer is time-series integration, where the system tracks how expressions, tones, or words evolve over seconds or across conversational turns. A fleeting grimace accompanied by a consistently upbeat vocal pitch might indicate a transient surprise, whereas persistent, discordant cues in voice and posture can suggest deeper conflict or stress. The AI must calibrate these signals according to a user’s baseline behavior—some people naturally speak with a higher pitch, while others might rarely smile. Recognizing these individual differences is crucial to avoiding misinterpretations.
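One way to sketch the baseline calibration and persistence ideas above is to keep running statistics per user and express each new observation as a deviation from that user's norm. The class `UserBaseline`, the helper `longest_run`, and the pitch values in the usage example are hypothetical; the running mean and variance use Welford's online algorithm.

```python
import math
from typing import Iterable

class UserBaseline:
    """Running mean and standard deviation of one feature for one user."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the running mean

    def update(self, x: float) -> None:
        """Welford's online update for mean and variance."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def std(self) -> float:
        """Sample standard deviation (0.0 until two observations exist)."""
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0

    def deviation(self, x: float) -> float:
        """Z-score of x relative to this user's baseline."""
        s = self.std()
        return (x - self.mean) / s if s > 0 else 0.0

def longest_run(flags: Iterable[bool]) -> int:
    """Length of the longest consecutive run of True flags,
    e.g. frames in which voice and posture cues were discordant."""
    best = current = 0
    for flag in flags:
        current = current + 1 if flag else 0
        best = max(best, current)
    return best

if __name__ == "__main__":
    low_voice = UserBaseline()
    for pitch_hz in [200, 210, 190, 205, 195]:  # illustrative values only
        low_voice.update(pitch_hz)
    # 240 Hz is far above this user's norm, though it might be
    # ordinary for a user who naturally speaks at a higher pitch.
    print(round(low_voice.deviation(240), 2))
```

A short run of discordant frames could be treated as a transient reaction, while a long run would be a candidate for the persistent conflict or stress described above; where to draw that line is a tuning decision this sketch does not make.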

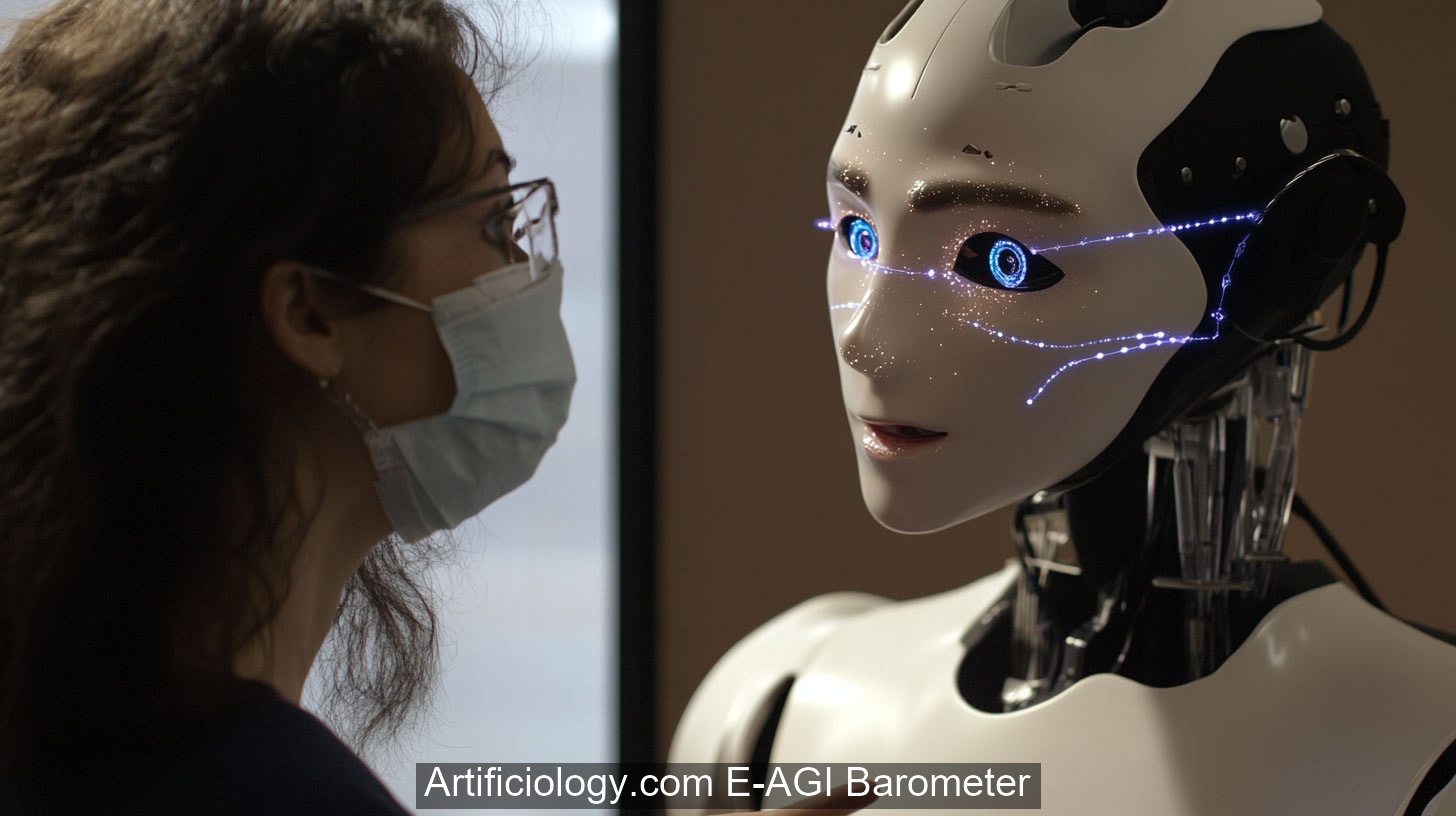

Challenges in multimodal emotion integration include synchronizing data (ensuring that a facial reaction matches the exact moment in the user’s speech), dealing with partial or missing signals (like a user wearing a mask), and confronting cultural or individual variations in emotional display norms. Advanced approaches can use machine learning models trained on annotated datasets of emotion-labeled audio-video clips and textual or physiological data, weighting each channel by reliability in real time. Alternatively, rule-based methods might specify how to combine certain patterns—e.g., elevated pitch plus tense eyebrows suggests agitation.
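The idea of weighting each channel by reliability while tolerating missing signals can be illustrated with a simple late-fusion sketch. Everything here is an assumption for illustration: the function `fuse`, the channel names, and the reliability weights, which in practice might come from signal-quality estimates such as lighting level or signal-to-noise ratio.

```python
from typing import Dict, Optional

def fuse(channels: Dict[str, Optional[Dict[str, float]]],
         reliability: Dict[str, float]) -> Optional[Dict[str, float]]:
    """Reliability-weighted average of per-channel emotion probabilities.

    A channel whose value is None (for example, a face hidden by a mask)
    or whose reliability is zero is skipped, and the remaining weights
    are renormalized. Returns None if no channel is usable.
    """
    fused: Dict[str, float] = {}
    total_weight = 0.0
    for name, probs in channels.items():
        weight = reliability.get(name, 0.0)
        if probs is None or weight <= 0.0:
            continue
        total_weight += weight
        for label, p in probs.items():
            fused[label] = fused.get(label, 0.0) + weight * p
    if total_weight == 0.0:
        return None
    return {label: value / total_weight for label, value in fused.items()}

if __name__ == "__main__":
    channels = {
        "face": None,  # occluded, so no estimate available
        "voice": {"anger": 0.6, "neutral": 0.4},
        "text": {"anger": 0.4, "neutral": 0.6},
    }
    reliability = {"face": 0.9, "voice": 0.75, "text": 0.25}
    print(fuse(channels, reliability))
```

A learned model could replace the fixed weights with ones predicted from the input, and a rule-based system could sit on top of the fused output; this sketch only shows the weighting and the handling of missing channels.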

Evaluation typically involves measuring accuracy in detecting specific emotions (joy, sadness, anger, fear, surprise, etc.) or more nuanced states (sarcasm, boredom). Researchers also examine whether multimodal integration noticeably improves performance over single-channel methods. Another key question is how gracefully the system handles real-world conditions with background noise, shifting lighting, or incomplete user data. Successful implementations show robust detection of complex or mixed emotions and adapt swiftly to user signals, enabling more empathetic, context-sensitive responses.
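The comparison between multimodal and single-channel performance can be sketched with two standard metrics, accuracy and macro-averaged F1. The label lists in the usage example are made-up toy data used only to show the computation, not results from any system; `accuracy` and `macro_f1` are helper names chosen here.

```python
from typing import Sequence

def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of predictions that match the reference labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1, so rare emotions count equally."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)

if __name__ == "__main__":
    # Toy labels for illustration only.
    truth = ["joy", "sadness", "anger", "joy", "fear", "sadness"]
    face_only = ["joy", "joy", "anger", "joy", "sadness", "sadness"]
    fused = ["joy", "sadness", "anger", "joy", "fear", "joy"]
    for name, pred in [("face only", face_only), ("fused", fused)]:
        print(name, round(accuracy(truth, pred), 3),
              round(macro_f1(truth, pred), 3))
```

Running the same comparison on subsets recorded under noise, poor lighting, or with one channel removed would address the robustness question raised above.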

Ultimately, multimodal emotion integration enables an AI to approximate the human facility for reading subtle social cues. By unifying signals from the face, voice, body language, and possibly textual context, the system gains a deeper, more holistic view of a user’s affective state, supporting genuinely empathetic interactions and more meaningful human-robot communication.
